What Is a Stacked Camera Sensor and How Does It Work?

Most smartphones have a camera island that sticks out from the rest of the body. Yet even counting that bump, today’s phones are thinner than their counterparts from a few years ago, and the photos and videos they take look better.

Understanding Digital Photography

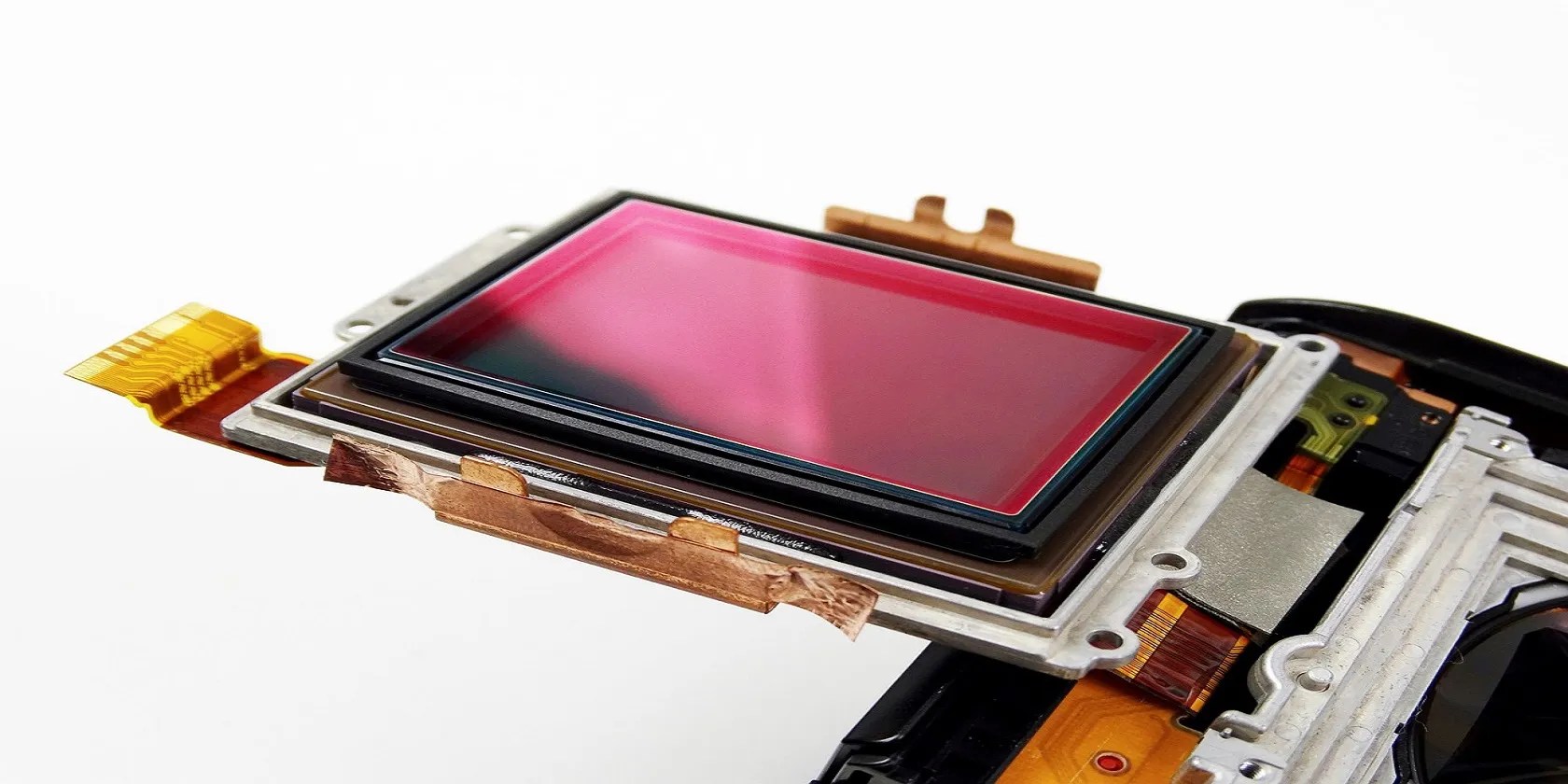

The difference between analog and digital cameras is that the former records pictures on film made of a light-sensitive material, while the latter uses an electronic sensor. Each pixel (one of the individual points that make up a digital image) holds the light information captured by a tiny part of that sensor, with one such part for each pixel in the photo.

There are two types of digital camera sensors, CCD (an acronym for Charge-Coupled Device) and CMOS (Complementary Metal-Oxide Semiconductor). All modern smartphone cameras use the latter, so that’s the tech we’ll explain below.

A CMOS sensor consists of a few elements. The photodiode is the most important one: it generates an electric signal when it receives light. That signal is stored by a transistor right next to the photodiode, which translates it into digital information and sends it to an electronic circuit.
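To make that chain a little more concrete, here’s a deliberately simplified Python sketch of a single pixel. It lumps the transistor readout and the conversion to digital into one step and ignores noise entirely. The parameter names and values (quantum efficiency, full-well capacity, a 10-bit converter) are hypothetical, picked only for illustration and not taken from any real sensor.

```python
def pixel_to_digital(photons: float,
                     quantum_efficiency: float = 0.8,
                     full_well_electrons: int = 6000,
                     adc_bits: int = 10) -> int:
    """Turn the light hitting one photodiode into a digital number.

    Simplified, noise-free model; all default values are hypothetical.
    """
    # Photodiode: only a fraction of incoming photons free an electron.
    electrons = photons * quantum_efficiency
    # A pixel can only hold so much charge (its "full well"); brighter light clips.
    electrons = min(electrons, full_well_electrons)
    # Digitization: map 0..full well linearly onto 0..(2**bits - 1).
    max_code = 2 ** adc_bits - 1
    return round(electrons / full_well_electrons * max_code)


for photons in (0, 1875, 10_000):
    print(photons, "photons ->", pixel_to_digital(photons))
```

The last case shows saturation: once the pixel is full, extra light no longer changes its output.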

That circuit is responsible for interpreting that data and passing it, along with the data from millions of other pixels, to the Image Signal Processor (ISP), which creates the final picture.

The Early Days of Phone Cameras

Until 2008, CMOS sensors had a serious issue: the wiring needed to send pixel information to the ISP sat between the photodiode and the lens, blocking some of the light. CCD sensors used the same layout but were more light-sensitive; for CMOS, it meant darker, noisier, and blurrier photos.

The fix was a simple idea: move the photodiode above the wiring so it receives more light, which improves picture quality. This design is called a Back-Side Illuminated (BSI) sensor, as opposed to the earlier Front-Side Illuminated ones.

To put things in context, the iPhone 4, which started Apple’s reputation in smartphone photography, was among the first phones to use this type of sensor. These days, virtually all smartphone cameras use BSI sensors.

Stacked Sensors Improve Photo Quality and Reduce Size

Even after the wiring was moved out of the way, CMOS sensors still had room for improvement. One issue was the circuitry that processes the transistor’s information: it wrapped around the photodiode. As a result, about half of the light reaching each pixel landed on a part of the sensor that couldn’t capture it.
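The share of a pixel’s area that actually collects light is commonly called its fill factor. A minimal sketch of why it matters, assuming the rough “about half” figure above and otherwise identical pixels: the signal a pixel collects scales directly with that fraction. The function name and numbers are illustrative only, and a fill factor of 1.0 is an idealization no real pixel reaches.

```python
def collected_signal(incoming_light: float, fill_factor: float) -> float:
    """Light actually captured by a pixel, given the fraction of its area that is
    light-sensitive (the fill factor, between 0 and 1). Illustrative model only."""
    if not 0.0 <= fill_factor <= 1.0:
        raise ValueError("fill_factor must be between 0 and 1")
    return incoming_light * fill_factor


wrapped = collected_signal(1000, fill_factor=0.5)  # circuitry beside the photodiode
stacked = collected_signal(1000, fill_factor=1.0)  # idealized: circuitry moved below
print(wrapped, stacked, stacked / wrapped)
```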

In 2012, Sony announced the first stacked CMOS sensor. Instead of wrapping around the photodiode, the circuitry sits below it. Because it (partially) replaces a substrate that was there for structural rigidity, it adds no thickness. In fact, later improvements in the stacking process, both by Sony and by other manufacturers that adopted the technology, produced thinner sensors, which in turn enabled thinner phones.

What About Even More Stacking?

By moving the circuitry below the photodiode, one would think the top layer would be occupied solely by the light-capturing part, right? Wrong.

Remember the transistor? It sits right beside the photodiode, taking even more precious light-capturing space. The solution? More stacking!

Engineers had done something similar before. In 2017, Sony announced a camera sensor with a layer of DRAM between the photodiode and the circuitry, enabling 960 fps super slow-motion video. Stacking the transistor was a matter of applying the same idea to another part of the existing sensor.
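Some rough arithmetic hints at why a memory layer helps at that frame rate. The sketch below computes the raw, uncompressed data a sensor produces per second. The 1920 x 1080 resolution and 10-bit depth are hypothetical round numbers, not the specs of Sony’s sensor; the point is only how quickly the total grows with frame rate.

```python
def raw_data_rate_gbit_s(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed sensor output in gigabits per second."""
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps / 1e9


# Hypothetical example: a 1920 x 1080 readout at 10 bits per pixel.
for fps in (30, 960):
    print(f"{fps:>4} fps: {raw_data_rate_gbit_s(1920, 1080, 10, fps):.2f} Gbit/s")
```

Going from 30 to 960 fps multiplies the data by 32. Memory on the sensor itself can absorb a burst like that and hand frames off at a slower pace, which is, roughly, the role the extra layer plays.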

Now the top layer of the sensor holds the photodiode and nothing else. This roughly doubles the signal the photodiode can capture and the transistor can store.

The most immediate effect is that each pixel has twice as much light information to work with. And, as with everything in photography, more light means more detailed pictures.
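One standard way to put a number on “more light is better” is photon shot noise. Light arrives randomly, so the noise in a signal of N electrons is about √N, and the best possible signal-to-noise ratio (SNR) is N / √N = √N. This Python sketch uses hypothetical electron counts and ignores every other noise source; it shows that doubling the signal improves that ratio by about 41%, not 100%.

```python
import math


def shot_noise_snr(signal_electrons: float) -> float:
    """Best-case SNR when photon shot noise is the only noise source."""
    noise = math.sqrt(signal_electrons)  # Poisson statistics: noise = sqrt(signal)
    return signal_electrons / noise


before = shot_noise_snr(5_000)   # hypothetical signal before
after = shot_noise_snr(10_000)   # same pixel collecting twice the light
print(f"SNR {before:.1f} -> {after:.1f} (x{after / before:.2f})")
```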

The transistor benefits too: with a layer to itself, it has more room, so it can better translate the photodiode’s electric signals into digital information. One possible application of this is reducing image noise, which further improves how photos look.
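Those two changes, a bigger maximum signal and less noise, both feed a common sensor metric: dynamic range, the ratio between the largest signal a pixel can hold and its noise floor. It’s usually quoted in decibels (20 × log10 of the ratio) or in photographic stops (log2 of the ratio). The values in this sketch are hypothetical; it only shows that doubling the maximum signal adds exactly one stop (about 6 dB), and that lowering the noise adds more on top.

```python
import math


def dynamic_range(max_signal_e: float, noise_floor_e: float) -> tuple[float, float]:
    """Return (decibels, stops) for a pixel's dynamic range."""
    ratio = max_signal_e / noise_floor_e
    return 20 * math.log10(ratio), math.log2(ratio)


# Hypothetical pixels, values in electrons.
cases = {
    "before": (6_000, 2.0),
    "2x signal": (12_000, 2.0),
    "2x signal, less noise": (12_000, 1.5),
}
for name, (max_signal, noise) in cases.items():
    db, stops = dynamic_range(max_signal, noise)
    print(f"{name:<22} {db:5.1f} dB  {stops:5.2f} stops")
```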

Stacked Sensors for a Brighter Future

While single-stacked sensors (photodiode and transistor in one layer, circuitry below it) have been around for some time, double-stacked ones (one layer for each part) are still fairly new. They’re mostly used in professional cameras; the first phone to feature such a sensor, the Sony Xperia 1 V, was announced in May 2023.

That means the technology is still in its infancy. Along with the many other improvements mobile photography has seen so far, stacked sensors put smartphone cameras on the path to a brighter future. Or should we say a brighter picture?
